CLI Reference

The llama CLI tool helps you set up and use the Llama Stack. It should be available on your path after installing the llama-stack package.

Installation

You have two ways to install Llama Stack:

- Install as a package: You can install the package directly from PyPI by running the following command:

pip install llama-stack

- Install from source: If you prefer to install from the source code, follow these steps:

mkdir -p ~/local
cd ~/local
git clone git@github.com:meta-llama/llama-stack.git

conda create -n myenv python=3.10
conda activate myenv

cd llama-stack
$CONDA_PREFIX/bin/pip install -e .
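After either install path, the llama executable should be on your path. The following is a minimal sketch of how you might check that from Python; the helper name find_cli is hypothetical and is not part of llama-stack, and it relies only on the standard library.

```python
import shutil


def find_cli(name: str = "llama"):
    """Return the absolute path of an executable on PATH, or None if it is missing."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_cli()
    if path is None:
        # Typical cause: the environment where llama-stack was installed is not active.
        print("llama not found on PATH; check that the right environment is active")
    else:
        print(f"llama found at {path}")
```

If the check reports that llama is missing after a source install, activating the conda environment created above is the first thing to try.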

llama subcommands

- download: The llama CLI supports downloading models from Meta or Hugging Face.

- model: Lists available models and their properties.

- stack: Allows you to build and run a Llama Stack server. You can read more about this here.

Sample Usage

llama --help

usage: llama [-h] {download,model,stack} ...

Welcome to the Llama CLI

options:

-h, --help show this help message and exit

subcommands:

{download,model,stack}

Downloading models

You first need to have models downloaded locally.

To download any model, you need its Model Descriptor. You can obtain it by running the command:

llama model list

You should see a table like this:

+----------------------------------+------------------------------------------+----------------+

| Model Descriptor | Hugging Face Repo | Context Length |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-8B | meta-llama/Llama-3.1-8B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-70B | meta-llama/Llama-3.1-70B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B:bf16-mp8 | meta-llama/Llama-3.1-405B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B | meta-llama/Llama-3.1-405B-FP8 | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B:bf16-mp16 | meta-llama/Llama-3.1-405B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-8B-Instruct | meta-llama/Llama-3.1-8B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-70B-Instruct | meta-llama/Llama-3.1-70B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B-Instruct:bf16-mp8 | meta-llama/Llama-3.1-405B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B-Instruct | meta-llama/Llama-3.1-405B-Instruct-FP8 | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.1-405B-Instruct:bf16-mp16 | meta-llama/Llama-3.1-405B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-1B | meta-llama/Llama-3.2-1B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-3B | meta-llama/Llama-3.2-3B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-11B-Vision | meta-llama/Llama-3.2-11B-Vision | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-90B-Vision | meta-llama/Llama-3.2-90B-Vision | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-1B-Instruct | meta-llama/Llama-3.2-1B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-3B-Instruct | meta-llama/Llama-3.2-3B-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-11B-Vision-Instruct | meta-llama/Llama-3.2-11B-Vision-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama3.2-90B-Vision-Instruct | meta-llama/Llama-3.2-90B-Vision-Instruct | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-3-11B-Vision | meta-llama/Llama-Guard-3-11B-Vision | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-3-1B:int4-mp1 | meta-llama/Llama-Guard-3-1B-INT4 | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-3-1B | meta-llama/Llama-Guard-3-1B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-3-8B | meta-llama/Llama-Guard-3-8B | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-3-8B:int8-mp1 | meta-llama/Llama-Guard-3-8B-INT8 | 128K |

+----------------------------------+------------------------------------------+----------------+

| Prompt-Guard-86M | meta-llama/Prompt-Guard-86M | 128K |

+----------------------------------+------------------------------------------+----------------+

| Llama-Guard-2-8B | meta-llama/Llama-Guard-2-8B | 4K |

+----------------------------------+------------------------------------------+----------------+
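If you want to pick Model Descriptors out of this listing in a script, a small parser is enough. The sketch below assumes the output keeps the bordered layout shown above (rows starting with "|", borders starting with "+"); parse_model_table and SAMPLE are illustrative names, and the sample is a short excerpt of the table rather than real captured output.

```python
def parse_model_table(text: str) -> list[dict[str, str]]:
    """Parse a '+---+' / '| a | b |' style table into a list of row dicts."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines and blank lines
        # Drop the outer pipes, then split the remaining text into cells.
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        rows.append(cells)
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


# Excerpt of the table above, used only for illustration.
SAMPLE = """
+----------------------+----------------------------------+----------------+
| Model Descriptor     | Hugging Face Repo                | Context Length |
+----------------------+----------------------------------+----------------+
| Llama3.2-3B-Instruct | meta-llama/Llama-3.2-3B-Instruct | 128K           |
+----------------------+----------------------------------+----------------+
| Llama-Guard-2-8B     | meta-llama/Llama-Guard-2-8B      | 4K             |
+----------------------+----------------------------------+----------------+
"""

if __name__ == "__main__":
    for model in parse_model_table(SAMPLE):
        print(model["Model Descriptor"], "->", model["Hugging Face Repo"])
```

The value in the Model Descriptor column is what you pass to --model-id when downloading.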

To download models, you can use the llama download command.

Downloading from Meta

Here are example download commands for the 3B-Instruct and 11B-Vision-Instruct models. You will need a META_URL, which can be obtained from here.

Download the required checkpoints using the following commands:

# download the 3B Instruct model, this can be run on a single GPU

llama download --source meta --model-id Llama3.2-3B-Instruct --meta-url META_URL

# you can also get the 11B Vision Instruct model

llama download --source meta --model-id Llama3.2-11B-Vision-Instruct --meta-url META_URL

# llama-agents have safety enabled by default. For this, you will need

# safety models -- Llama-Guard and Prompt-Guard

llama download --source meta --model-id Prompt-Guard-86M --meta-url META_URL

llama download --source meta --model-id Llama-Guard-3-1B --meta-url META_URL
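When several checkpoints are needed, the commands above differ only in the model ID, so they can be generated rather than typed by hand. This is a rough sketch that only builds and prints the command lines; it uses just the flags shown above (--source, --model-id, --meta-url), and meta_download_command and MODELS are hypothetical names. META_URL remains a placeholder for the URL you were issued.

```python
import shlex


def meta_download_command(model_id: str, meta_url: str) -> list[str]:
    """Build the argv for one `llama download` call against the Meta source."""
    return [
        "llama", "download",
        "--source", "meta",
        "--model-id", model_id,
        "--meta-url", meta_url,
    ]


# An inference model plus the two safety models mentioned above.
MODELS = ["Llama3.2-3B-Instruct", "Prompt-Guard-86M", "Llama-Guard-3-1B"]

if __name__ == "__main__":
    for model_id in MODELS:
        # Printing keeps this side-effect free; pass the list to
        # subprocess.run(..., check=True) to actually run the download.
        print(shlex.join(meta_download_command(model_id, "META_URL")))
```

Building the argument list instead of a single string avoids shell quoting problems if the signed URL contains characters such as & or ?.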

Downloading from Hugging Face

Essentially the same commands work for Hugging Face; just replace --source meta with --source huggingface.

llama download --source huggingface --model-id Llama3.1-8B-Instruct --hf-token <HF_TOKEN>

llama download --source huggingface --model-id Llama3.1-70B-Instruct --hf-token <HF_TOKEN>

llama download --source huggingface --model-id Llama-Guard-3-1B --ignore-patterns *original*

llama download --source huggingface --model-id Prompt-Guard-86M --ignore-patterns *original*

Important: Set your environment variable HF_TOKEN or pass in --hf-token to the command to validate your access. You can find your token at https://huggingface.co/settings/tokens.

Tip: The default for llama download is to run with --ignore-patterns *.safetensors, since we use the .pth files in the original folder. For Llama Guard and Prompt Guard, however, we need safetensors. Hence, please run with --ignore-patterns *original* so that safetensors are downloaded and .pth files are ignored.
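To see why the two ignore patterns select different files, it can help to try them against a file listing. The sketch below is an illustration only: the file names are made up, and it assumes the patterns are matched glob-style against repository-relative paths (as Python's fnmatch does), which this page does not state explicitly.

```python
from fnmatch import fnmatch

# Hypothetical repository contents, for illustration only.
FILES = [
    "config.json",
    "model.safetensors",
    "original/consolidated.00.pth",
    "original/params.json",
]


def kept_files(files: list[str], ignore_pattern: str) -> list[str]:
    """Return the files that do NOT match the ignore pattern."""
    return [name for name in files if not fnmatch(name, ignore_pattern)]


if __name__ == "__main__":
    # Default behaviour described in the tip: skip safetensors, keep original/*.pth.
    print(kept_files(FILES, "*.safetensors"))
    # Guard models: skip the original folder, keep safetensors.
    print(kept_files(FILES, "*original*"))
```

Under these assumptions, the first pattern keeps the .pth checkpoint and the second keeps the safetensors file, which matches the behaviour the tip describes.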

Understand the models

The llama model command helps you explore the model’s interface.

- download: Download the model from different sources. (meta, huggingface)

- list: Lists all the models available for download with hardware requirements to deploy the models.

- prompt-format: Show llama model message formats.

- describe: Describes all the properties of the model.

Sample Usage

llama model <subcommand> <options>

llama model --help

usage: llama model [-h] {download,list,prompt-format,describe} ...

Work with llama models

options:

-h, --help show this help message and exit

model_subcommands:

{download,list,prompt-format,describe}

You can use the describe command to learn more about a model:

llama model describe -m Llama3.2-3B-Instruct

Describe

+-----------------------------+----------------------------------+

| Model | Llama3.2-3B-Instruct |

+-----------------------------+----------------------------------+

| Hugging Face ID | meta-llama/Llama-3.2-3B-Instruct |

+-----------------------------+----------------------------------+

| Description | Llama 3.2 3b instruct model |

+-----------------------------+----------------------------------+

| Context Length | 128K tokens |

+-----------------------------+----------------------------------+

| Weights format | bf16 |

+-----------------------------+----------------------------------+

| Model params.json | { |

| | "dim": 3072, |

| | "n_layers": 28, |

| | "n_heads": 24, |

| | "n_kv_heads": 8, |

| | "vocab_size": 128256, |

| | "ffn_dim_multiplier": 1.0, |

| | "multiple_of": 256, |

| | "norm_eps": 1e-05, |

| | "rope_theta": 500000.0, |

| | "use_scaled_rope": true |

| | } |

+-----------------------------+----------------------------------+

| Recommended sampling params | { |

| | "strategy": "top_p", |

| | "temperature": 1.0, |

| | "top_p": 0.9, |

| | "top_k": 0 |

| | } |

+-----------------------------+----------------------------------+
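The params.json values above can be combined into a few derived quantities. The sketch below copies a subset of the values from the describe output and assumes the usual transformer conventions (per-head width is dim divided by n_heads, and n_kv_heads counts the shared key/value heads); these interpretations are assumptions, not something the describe output states.

```python
import json

# Subset of the "Model params.json" block shown above.
PARAMS_JSON = """
{
  "dim": 3072,
  "n_layers": 28,
  "n_heads": 24,
  "n_kv_heads": 8,
  "vocab_size": 128256
}
"""

params = json.loads(PARAMS_JSON)

# Width of each attention head, assuming dim is split evenly across heads.
head_dim = params["dim"] // params["n_heads"]

# Number of query heads sharing one key/value head (grouped-query attention).
queries_per_kv_head = params["n_heads"] // params["n_kv_heads"]

# Size of a vocab_size x dim token embedding table.
embedding_params = params["vocab_size"] * params["dim"]

if __name__ == "__main__":
    print(f"head_dim={head_dim}")
    print(f"queries_per_kv_head={queries_per_kv_head}")
    print(f"embedding_params={embedding_params:,}")
```

With the values shown, this gives a head width of 128, three query heads per key/value head, and roughly 394 million parameters in the embedding table.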

Prompt Format

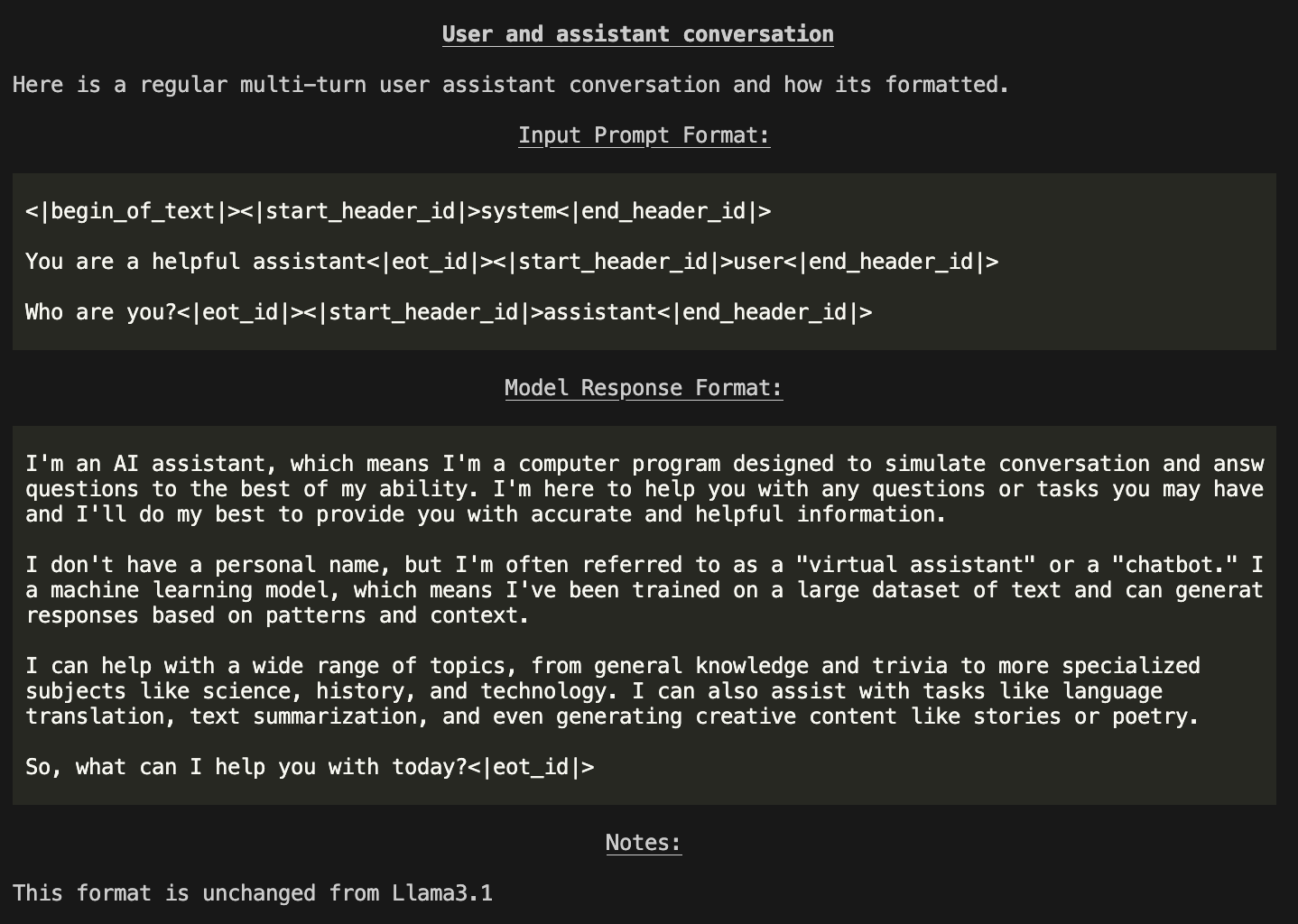

You can also run llama model prompt-format to see all of the templates and their tokens:

llama model prompt-format -m Llama3.2-3B-Instruct

You will be shown a Markdown formatted description of the model interface and how prompts / messages are formatted for various scenarios.

NOTE: Outputs in terminal are color printed to show special tokens.